The National Museum of Computing

You know me and technology - it's kind of my thing. A few weeks ago, a friend who shares that interest came to visit. Since I’m not the type to just stay home, heading to The National Museum of Computing felt like the perfect plan.

We set off early in the morning, planning to be there at 10:30am, when the museum opens. After spending some time in traffic (what's a road trip without roadworks?), we arrived shortly after. We were greeted by a helpful receptionist, who explained what we could expect to see and gave us a map, which we referred to many times during the visit.

The museum is located in Block H on the famous Bletchley Park grounds, a building considered to be the world's first purpose-built computer centre. The Park itself played a crucial role in World War II as the top-secret site for Allied codebreaking. If you've seen The Imitation Game, you'll know this is where Alan Turing and his team worked to break the German Enigma code. But it wasn't just Turing: Bletchley was home to a vast team of mathematicians, engineers, and linguists, all working in secrecy to crack enemy communications. At the time, the German military relied on encrypted messages to coordinate attacks, move supplies, and relay orders. If the Allies could read those messages, they could anticipate movements, disrupt operations, and gain a crucial advantage. The work done at Bletchley is widely credited with shortening the war and saving millions of lives.

Enigma: A Million Million Million Possibilities

We started with the Enigma machine and the Bombe. Arriving right after opening meant we were just in time for the presentation. The museum has a fully working Enigma machine, built in Germany to the original specifications. I must admit, the numbers are mind-boggling: it had 158,962,555,217,826,360,000 possible settings. With no computers around at the time, it's easy to see why the Germans thought it was unbreakable.
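Where does that enormous number come from? Here is a minimal Python sketch of the commonly quoted calculation. It assumes a three-rotor Enigma with the rotors chosen from a set of five, 26 possible starting positions per rotor, and a plugboard with 10 cables; ring settings and the reflector are deliberately left out, and the function name is just for illustration.

```python
from math import factorial, perm


def enigma_settings(rotor_set: int = 5, rotors_used: int = 3,
                    letters: int = 26, cables: int = 10) -> int:
    """Count Enigma configurations under the assumptions stated above."""
    # Ordered choice of rotors for the three slots: 5 * 4 * 3 = 60
    rotor_orders = perm(rotor_set, rotors_used)
    # Each rotor can start at any of the 26 letters: 26 ** 3 = 17,576
    start_positions = letters ** rotors_used
    # Plugboard: pair up 20 of the 26 letters with 10 cables.
    # Divide out the order of the 6 unplugged letters, the order of
    # the 10 cables, and which end of each cable is which.
    unplugged = letters - 2 * cables
    plugboard = factorial(letters) // (
        factorial(unplugged) * factorial(cables) * 2 ** cables
    )
    return rotor_orders * start_positions * plugboard


print(f"{enigma_settings():,}")  # 158,962,555,217,826,360,000
```

Almost all of the size comes from the plugboard (about 150 trillion wirings on its own), which is why it mattered so much that the codebreakers found ways to reason around it rather than search it.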

Of course, it wasn't. The story starts with the Polish Bomba. In the 1930s, Marian Rejewski, along with fellow mathematicians Jerzy Różycki and Henryk Zygalski, managed to reverse-engineer the workings of the Enigma machine using clever mathematics and some German documents obtained by French intelligence and passed on to the Poles. Their work laid the foundation for everything that came after. They even built the first electromechanical device to help with decryption, the Bomba, which directly inspired the British.

Enter the Bombe, an electromechanical beast designed to break Enigma's encryption faster and at scale. It wasn't just Alan Turing who cracked the code: Gordon Welchman played a key role too, something that often goes under the radar. Without his contribution, most notably the diagonal board, the Bombe wouldn't have been nearly as effective. I have to admit, I felt a bit of a geeky thrill standing next to a reconstruction of the machine that changed the course of history. It is fully operational, and we were lucky enough to see it switched on and complete a decryption run. Watching it in action really put into perspective the sheer mechanical complexity involved and made me appreciate the incredible work and collaboration that went into cracking Enigma.

Lorenz SZ42: More Settings Than Atoms in the Universe

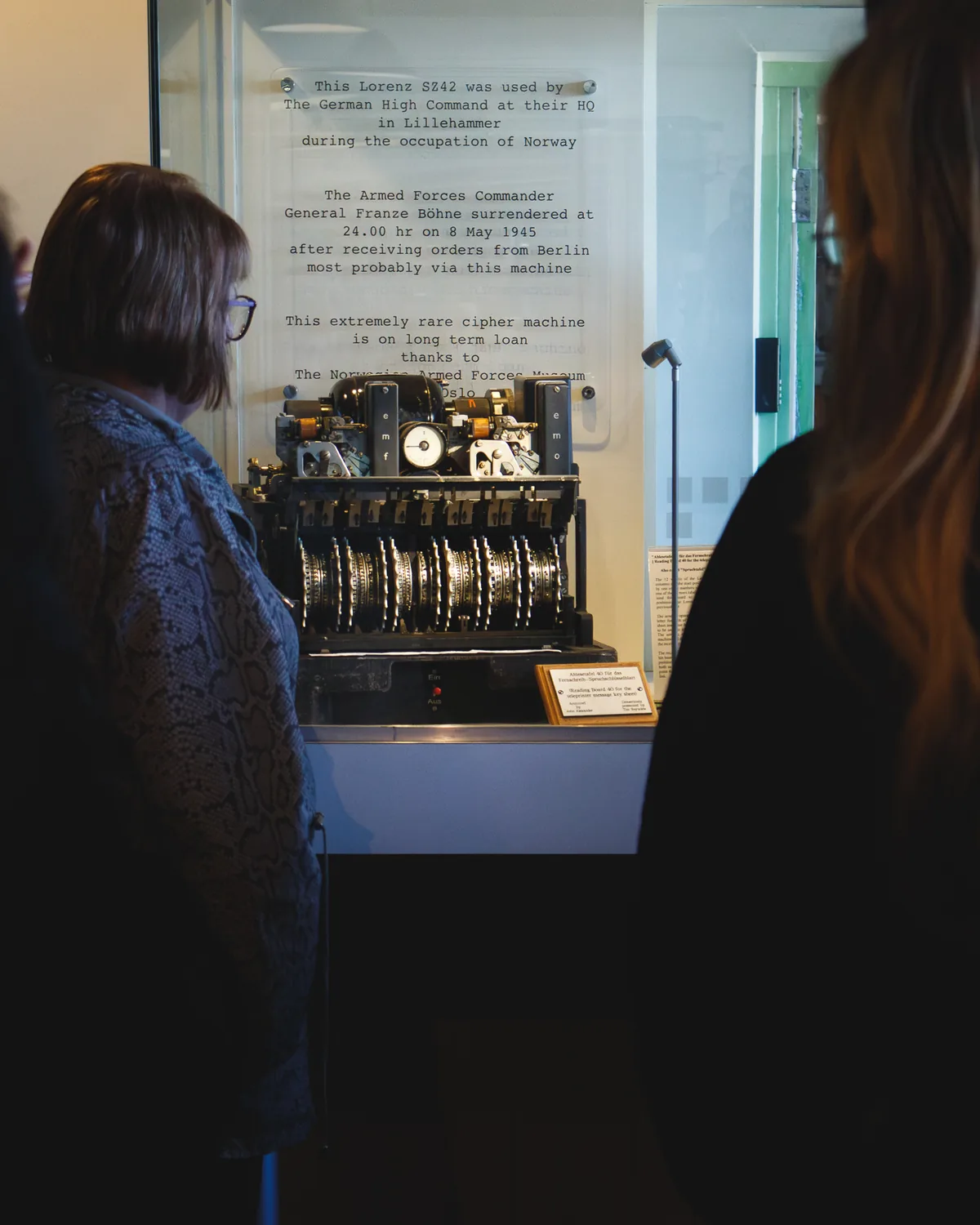

As we moved forward in time, the complexity kept increasing. In the Tunny gallery we saw the Lorenz SZ42, which made Enigma look like a child's toy. While Enigma was used for battlefield communications, Lorenz was reserved for the highest-level strategic messages, including communications between Hitler and his generals. Breaking it required another level of genius. The sheer scale of possibilities was incomprehensible: it had more possible settings than there are atoms in the known universe. And yet Bill Tutte, without ever seeing a Lorenz machine, figured out how it worked just by analysing intercepted messages.

All of this was possible because of a human error. On 30 August 1941, the same message was sent twice because the first transmission was not received properly. Against all the rules, the operator used the same wheel settings on the Lorenz machine for both, but typed the second message with abbreviations, so the two texts were slightly different. Because both were enciphered with the same key, combining the two ciphertexts cancelled the key out, and that gave Colonel John Tiltman enough of a foothold to break the code by hand in ten days.
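Why was sending two messages on the same settings such a gift? Lorenz combined each plaintext character with a keystream character using an XOR-style addition, so combining two ciphertexts made with the same key removes the key entirely. The sketch below is a simplified illustration, not how Lorenz worked in detail: it uses ordinary bytes and a random key as stand-ins for the 5-bit teleprinter code and the machine's wheel-generated keystream, and the two messages are made up.

```python
import os


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings position by position (truncates to the shorter)."""
    return bytes(x ^ y for x, y in zip(a, b))


# Two hypothetical versions of the same message; the second uses an abbreviation.
plain1 = b"REINFORCEMENTS ARRIVE TOMORROW"
plain2 = b"REINFORCEMENTS ARRIVE TMRW"
key = os.urandom(len(plain1))  # stand-in for the machine's keystream

cipher1 = xor_bytes(plain1, key)
cipher2 = xor_bytes(plain2, key)  # same key reused: the operator's mistake

# The key cancels: (p1 ^ k) ^ (p2 ^ k) == p1 ^ p2
depth = xor_bytes(cipher1, cipher2)
assert depth == xor_bytes(plain1, plain2)

# Wherever the two texts agree, the combined ciphertext is all zeros
assert depth[:21] == bytes(21)

# Once one plaintext is reconstructed, the keystream falls straight out of it
recovered_key = xor_bytes(cipher1, plain1)
assert recovered_key == key
```

The last step is the important one: recovering the keystream is what later let Tutte study its patterns and deduce how the machine generated it.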

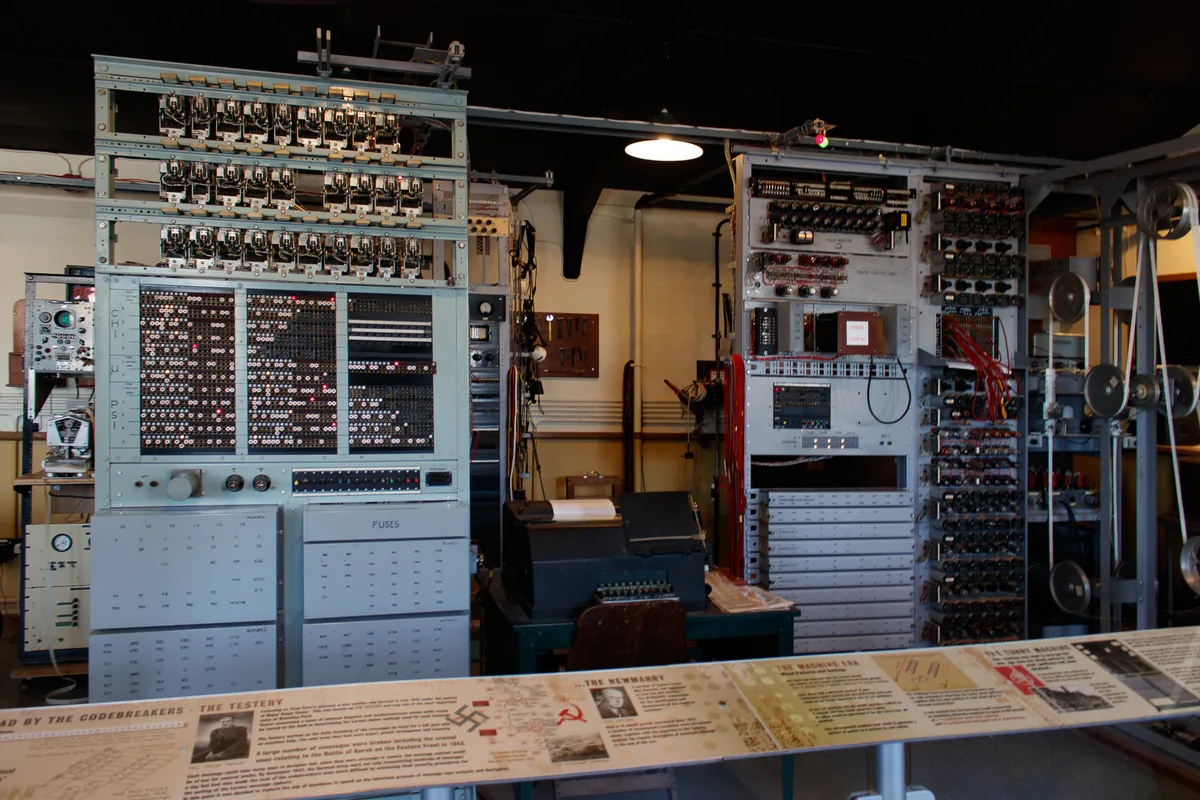

This was, of course, far too slow for a large-scale operation, so machines had to be built. The Heath Robinson was designed as an early attempt to automate the codebreaking. Unfortunately, its limitations soon became apparent: it read two paper tapes that had to be kept perfectly synchronized, and the tapes often broke when spun at speed through the machine's wheels. That meant frequent tape changes and a lot of maintenance, so building more of them wasn't the best idea.

Colossus: The Machine That Wasn’t a Computer

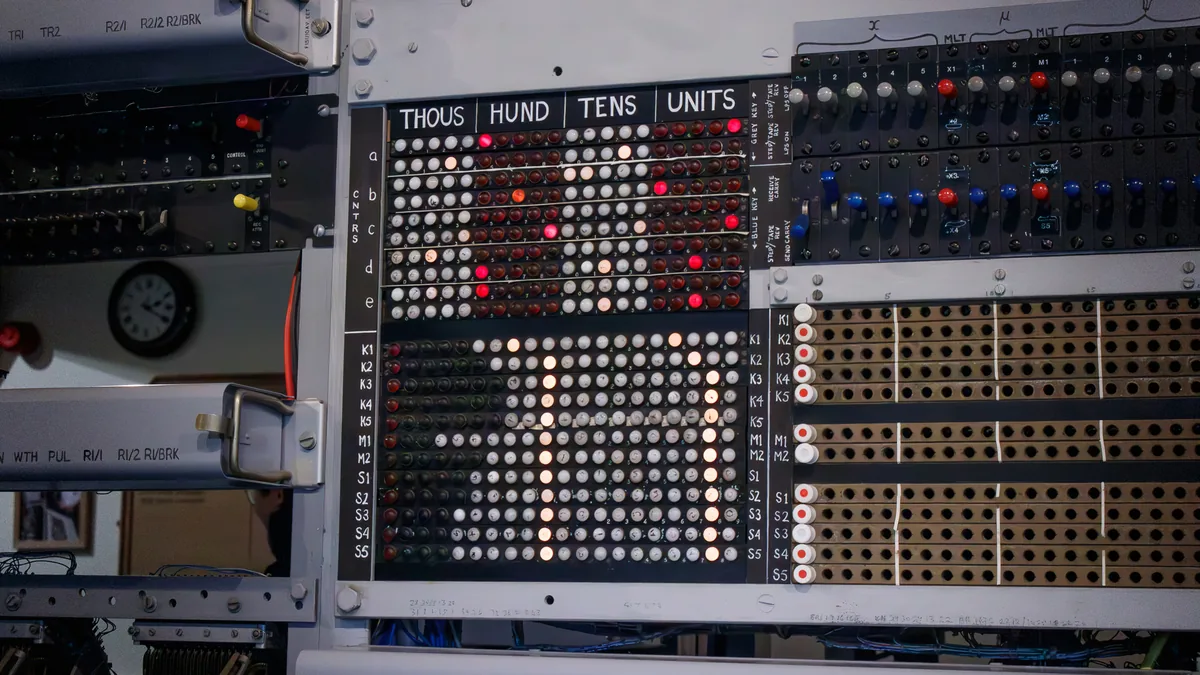

Finally, I found myself in front of the Colossus, the world’s first programmable electronic computer - or, well, sort of. It didn’t have stored programs like modern computers. Instead, it was wired by hand for specific tasks – like cracking Lorenz messages.

The first prototype took 45 minutes per message, but after some improvements (like switching to printed circuit boards), that was cut down to just 7.5 minutes. It drew 8 kilowatts of power, which sounds like a lot, but it ran so fast that it was the equivalent of reading 21 copies of the Bible for every message it decrypted (imagine a human doing that in seven and a half minutes). The intercepts were still fed in on paper tape, but everything else was electronic.

The best part? By the end of the war, the British could find the day's settings by lunchtime, despite the Germans changing them daily!

The museum has a working replica of the original machine, and we were able to see it in action. You could feel its power, literally: the room's temperature rose a few degrees after the machine had been running for a few minutes.

The Race to the Personal Computer

After Colossus, we moved on into the era of truly programmable computers and, eventually, the rise of personal computers. Even though we skipped a bit because some galleries were being renovated, I was still struck by how quickly we went from purpose-built machines designed for a single task to personal computers made for everyday people.

I mean, large companies had been using mainframes since the 1950s, and by the late 1970s computers were becoming affordable enough for small businesses to start using them too.

Then, in 1981, IBM launched the Personal Computer (PC), and it was a game-changer. Unlike earlier systems, which often weren't compatible with one another, IBM's PC set a standard. Now, computers from different manufacturers built to that standard could exchange data and run the same software, laying the foundation for the computing world we know today. Operating systems like CP/M and later MS-DOS allowed businesses to run off-the-shelf software rather than relying on custom-built programs. This meant companies could finally use computers for accounting, word processing, and other essential tasks without needing a team of specialists.

Soon computing made its way into homes. Walking through the exhibits, I saw some of the machines that paved the way: the ZX Spectrum, the BBC Micro, and the Commodore 64, the devices that brought programming to the masses. These machines weren't just pieces of hardware; they were gateways to learning, experimentation, and creativity for an entire generation. In just a few decades, we went from rooms full of specialized hardware to having powerful computers in our homes, and now in our pockets.

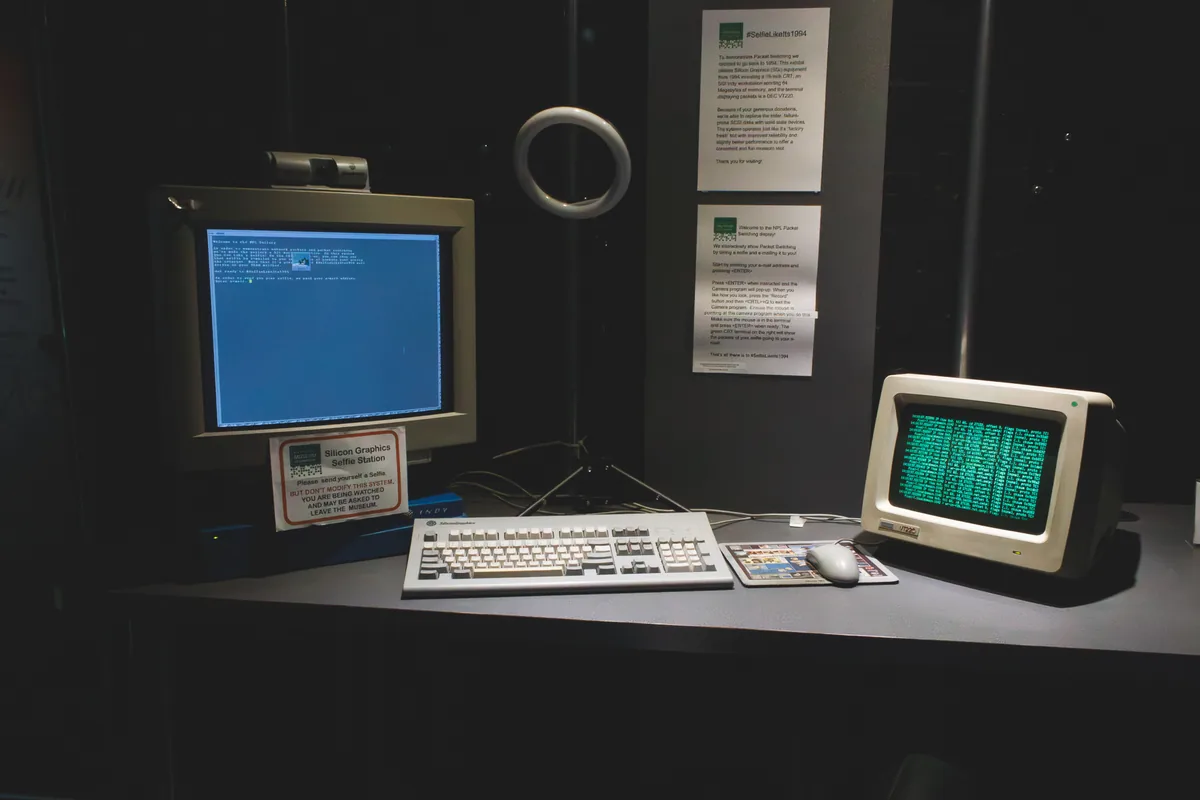

The Birth of Networking: Packet Switching and ARPANET

As computing spread, the next big challenge was networking. Computers were powerful, but they were still isolated machines, unable to communicate with each other efficiently.

Enter packet switching, a revolutionary idea that transformed how data could be sent across a network. Instead of sending entire messages in one piece, which could clog up the system and lead to delays, packet switching broke information into smaller chunks (packets). These packets could then travel separately across different routes and be reassembled at their destination, making communication faster and more reliable.
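To make the idea concrete, here is a toy Python sketch of the split-and-reassemble part of packet switching. It is only an illustration under simplifying assumptions: each packet carries just a sequence number and a slice of the message, and shuffling the list stands in for packets taking different routes and arriving out of order. Real networks also add addressing, error checking, and retransmission, and the function names here are hypothetical.

```python
import random
from typing import List, Tuple

Packet = Tuple[int, str]  # (sequence number, payload)


def to_packets(message: str, size: int) -> List[Packet]:
    """Split a message into numbered chunks of at most `size` characters."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def reassemble(packets: List[Packet]) -> str:
    """Put packets back in order by sequence number and join the payloads."""
    return "".join(payload for _, payload in sorted(packets))


packets = to_packets("HELLO FROM ONE COMPUTER TO ANOTHER", size=5)
random.shuffle(packets)  # simulate packets arriving out of order
print(reassemble(packets))  # HELLO FROM ONE COMPUTER TO ANOTHER
```

The sequence number is what makes the approach work: no single packet needs to arrive in order, because the receiver can always put the pieces back together.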

This concept laid the groundwork for ARPANET, the first large-scale implementation of packet switching and the direct ancestor of the modern internet. Initially connecting a few universities and research institutions, ARPANET proved that computers could share data over long distances. It not only improved collaboration between researchers but also set the stage for the global internet. It wasn't long before businesses, and eventually the public, caught on, leading to the interconnected world we live in today. The speed of this progress is simply mind-blowing.

Reflections on the Past and Future

Then there was the tech I had actually owned or used back in the day. It's strange to think that the gadgets I grew up with are now museum artifacts. I must admit that seeing the Commodore PET on display put a smile on my face: I'm not quite old enough to have owned one myself, but I recognized it immediately. Seeing some of my old phones (smart and dumb) and even a PC I once had displayed as museum exhibits, though, was a surreal moment.

Walking out, I felt both nostalgic and inspired. The work done at Bletchley Park didn’t just help win a war; it shaped the entire future of computing. It was a reminder of how much human ingenuity can achieve, even under extreme pressure.

Would I go back? Probably. If you have even the slightest interest in computers, cryptography, or just incredible stories of innovation, The National Museum of Computing is a must-visit.